RAG Support in Llama Assistant

We're thrilled to announce the latest release of Llama Assistant, now featuring powerful RAG (Retrieval-Augmented Generation) capabilities through LlamaIndex integration. This major update brings enhanced context-awareness and more accurate responses to your conversations.

RAG technology allows Llama Assistant to dynamically retrieve relevant information from your documents and data sources to provide more informed and accurate responses. By combining the power of large language models with real-time information retrieval, RAG enables the assistant to:

- Access and reference your specific documents and knowledge bases

- Provide responses grounded in your data rather than just general knowledge

- Maintain up-to-date information by pulling from your latest documents
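
To make the retrieve-then-generate idea concrete, here is a minimal sketch in plain Python. It is not how Llama Assistant or LlamaIndex implements retrieval: it swaps in a simple bag-of-words cosine similarity where a real pipeline would typically use embeddings, and the chunks, question, and function names are all hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its word tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k chunks most similar to the question."""
    q = tokenize(question)
    ranked = sorted(chunks, key=lambda chunk: cosine(q, tokenize(chunk)), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, context: list[str]) -> str:
    """Prepend the retrieved chunks to the question (the "augmented" step)."""
    joined = "\n".join(f"- {chunk}" for chunk in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


# Hypothetical document chunks, for illustration only.
chunks = [
    "The office is closed on public holidays.",
    "Refunds are processed within 14 days of the request.",
    "Support is available by email on weekdays.",
]
question = "How long do refunds take?"
top_chunks = retrieve(question, chunks, top_k=1)
prompt = build_prompt(question, top_chunks)
```

The resulting `prompt` is what would be sent to the language model, so the answer is grounded in the retrieved chunk rather than in general knowledge alone.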

Getting Started with RAG

To use RAG capabilities in Llama Assistant:

- Prepare your documents in supported formats (PDF, TXT, DOCX, etc.)

- Drag and drop your documents into the assistant

- Start asking questions. The assistant will now incorporate your documents into its responses.
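
If you are preparing many files at once, a quick pre-check can separate files in a named format from the rest before you drag them in. The sketch below is only an illustration: it assumes just the three formats listed above (the full supported list may be longer), and `SUPPORTED_EXTENSIONS`, `split_by_support`, and the file names are hypothetical, not part of Llama Assistant.

```python
from pathlib import Path

# Assumption: only the three formats named in the steps above.
# The assistant may accept more; adjust this set accordingly.
SUPPORTED_EXTENSIONS = {".pdf", ".txt", ".docx"}


def split_by_support(paths: list[str]) -> tuple[list[str], list[str]]:
    """Split file paths into (supported, unsupported) by file extension."""
    supported: list[str] = []
    unsupported: list[str] = []
    for p in paths:
        # Compare extensions case-insensitively, so "notes.TXT" still matches.
        if Path(p).suffix.lower() in SUPPORTED_EXTENSIONS:
            supported.append(p)
        else:
            unsupported.append(p)
    return supported, unsupported


# Hypothetical file names, for illustration only.
ready, skipped = split_by_support(["notes.TXT", "report.pdf", "archive.zip", "README"])
```

Here `ready` holds the files whose extension is in the assumed set, and `skipped` holds everything else, including files with no extension.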

Changes in v0.1.40, which supports RAG

- 💬 Added support for continuing conversations: The assistant now maintains context across multiple exchanges, enabling more natural and coherent conversations.

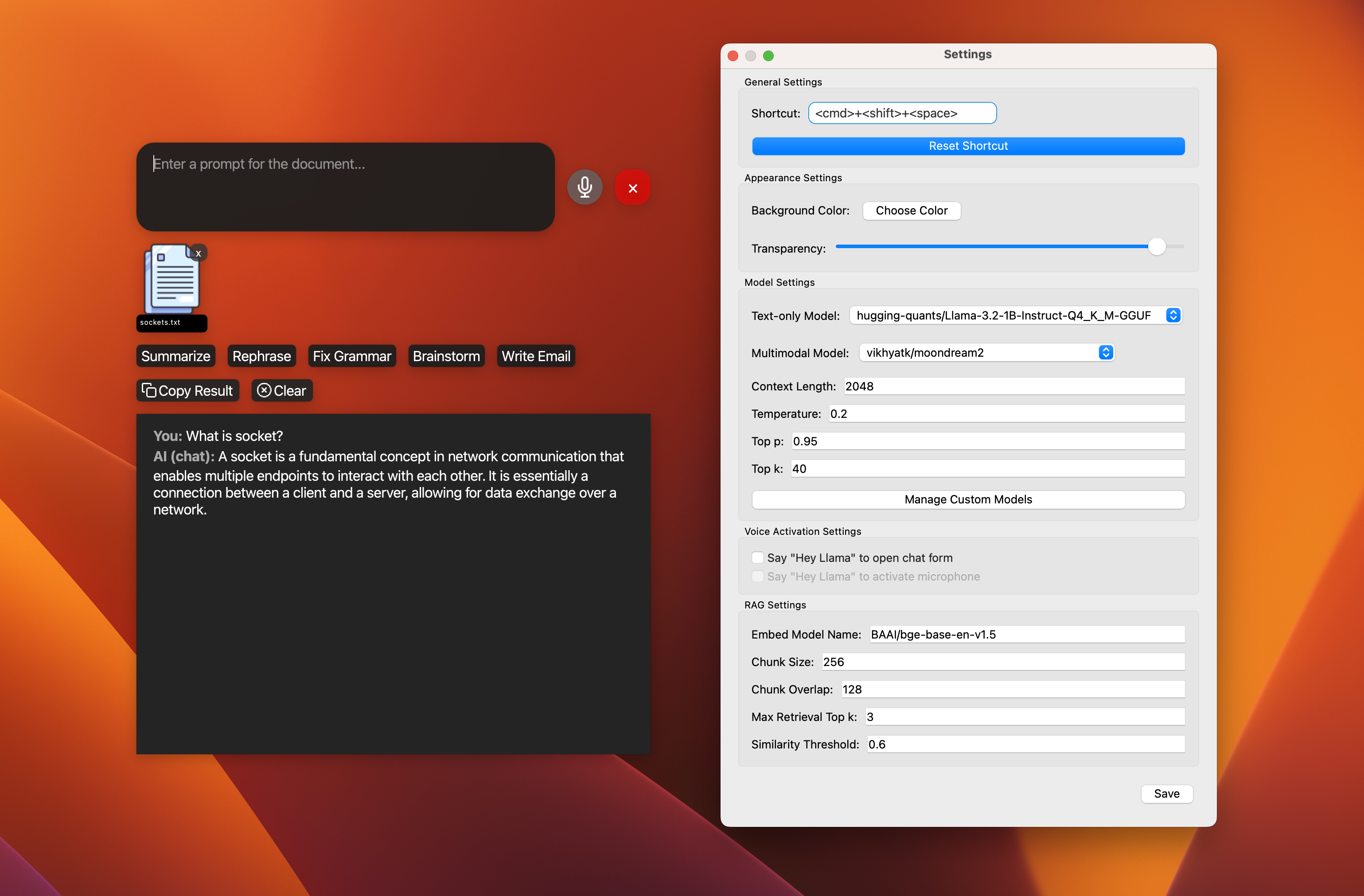

- 🔍 Added RAG support with LlamaIndex: Integrate your own documents and knowledge bases to get responses tailored to your specific information needs.

- ⚙️ Added model settings: Customize the behavior and performance of the AI model to match your requirements.

- 📝 Added markdown support: Enjoy rich text formatting in both your inputs and the assistant's responses.

- ⌛ Added a loading text animation shown while models download and answers are generated: Better visual feedback during processing.

- 🔄 Fixed chatbox scrolling issue: Improved user experience with smoother conversation flow.