Supported Models

Llama Assistant supports several AI models. These models run locally on your machine, which keeps your data private and lets the assistant work offline.

Text Models

Llama Assistant currently supports the following text-based language models:

🦙 Llama 3.2

- Developed by Meta AI Research

- Sizes: 1B and 3B parameters

- Quantization: 4-bit and 8-bit options available

- Capabilities: General language understanding and generation

- Model Link

🐧 Qwen2.5-0.5B-Instruct

- Developed by Alibaba Cloud

- Size: 0.5B parameters

- Quantization: 4-bit

- Capabilities: Instruction following, task completion

- Model Link
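To get a rough sense of what the sizes and quantization levels listed above mean for disk and memory use, the weight size can be approximated as parameter count times bits per weight. The sketch below uses the nominal parameter counts from this page. The function name is illustrative, and real model files are typically somewhat larger because of metadata, layers kept at higher precision, and runtime overhead such as the context cache.

```python
def estimate_weight_size_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate size of quantized weights in gigabytes (1 GB = 10^9 bytes)."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 1e9


# Nominal parameter counts and quantization options as listed on this page.
TEXT_MODELS = {
    "Llama 3.2 1B": (1e9, (4, 8)),
    "Llama 3.2 3B": (3e9, (4, 8)),
    "Qwen2.5-0.5B-Instruct": (0.5e9, (4,)),
}

if __name__ == "__main__":
    for name, (params, bit_options) in TEXT_MODELS.items():
        for bits in bit_options:
            size = estimate_weight_size_gb(params, bits)
            print(f"{name} @ {bits}-bit: ~{size:.2f} GB of weights")
```

Under this approximation, halving the bit width halves the weight size, which is why 4-bit variants are the usual choice on machines with limited memory.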

Multimodal Models

For tasks involving both text and images, Llama Assistant supports these multimodal models:

🌙 Moondream2

- Developed by Vikhyat Korrapati

- Model Link

🖼️ LLaVA 1.5/1.6

- Developed by Haotian Liu, Chunyuan Li, Qingyang Wu, and Yong Jae Lee

- Model Link

🖼️ MiniCPM-V-2.6

- Developed by OpenBMB

- Model Link

Using Models

Llama Assistant automatically downloads and manages the appropriate model based on your task and system capabilities. You can specify your preferred model in the settings if desired.

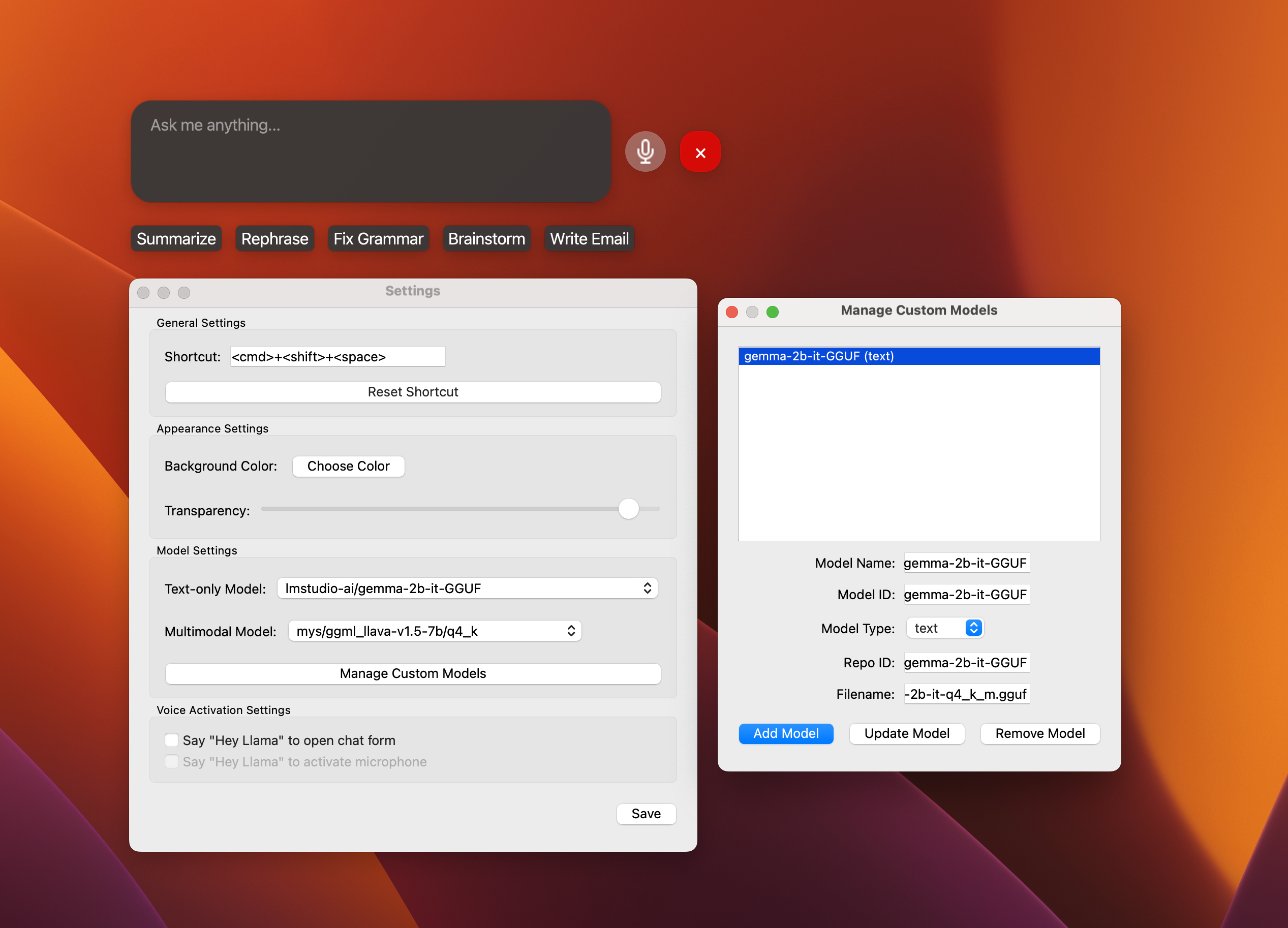
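The idea of choosing a model from the task and the system's capabilities can be illustrated with a small sketch. This is not Llama Assistant's actual selection logic: the `ModelOption` type, the `pick_model` function, and every RAM figure in the catalogue are hypothetical placeholders chosen only to make the example concrete.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelOption:
    name: str
    multimodal: bool
    approx_ram_gb: float  # placeholder value, NOT a measured requirement


# Hypothetical catalogue: model names come from this page, RAM figures are invented.
CATALOGUE = [
    ModelOption("Qwen2.5-0.5B-Instruct", False, 1.0),
    ModelOption("Llama 3.2 1B", False, 2.0),
    ModelOption("Llama 3.2 3B", False, 4.0),
    ModelOption("Moondream2", True, 4.0),
    ModelOption("MiniCPM-V-2.6", True, 8.0),
]


def pick_model(
    needs_images: bool,
    available_ram_gb: float,
    preferred: Optional[str] = None,
) -> Optional[ModelOption]:
    """Return a model matching the task that fits in memory, or None."""
    candidates = [
        m
        for m in CATALOGUE
        if m.multimodal == needs_images and m.approx_ram_gb <= available_ram_gb
    ]
    if not candidates:
        return None
    # A user preference (as set in the settings) wins when it is a valid candidate.
    for m in candidates:
        if m.name == preferred:
            return m
    # Otherwise pick the largest model that still fits.
    return max(candidates, key=lambda m: m.approx_ram_gb)
```

With these placeholder figures, `pick_model(needs_images=False, available_ram_gb=3.0)` selects "Llama 3.2 1B", while passing `preferred="Qwen2.5-0.5B-Instruct"` overrides that default.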

Custom Models

You can add support for a model directly from the app's settings, either by choosing a model from Hugging Face or by providing the URL of a custom model.

The list of supported models is updated regularly to include new models and capabilities. For reference, see the list of models supported by LlamaCPP.
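When pointing at a model file hosted on Hugging Face, it can help to know how a direct file URL breaks down into a repository, a revision, and a file name. The sketch below assumes the common `https://huggingface.co/<owner>/<repo>/resolve/<revision>/<file>` layout. The function name and the example URL are hypothetical, and the format Llama Assistant's settings actually expect may differ.

```python
from urllib.parse import urlparse


def parse_hf_file_url(url: str) -> tuple[str, str, str]:
    """Split a Hugging Face file URL into (repo_id, revision, filename)."""
    parsed = urlparse(url)
    if parsed.netloc != "huggingface.co":
        raise ValueError(f"not a huggingface.co URL: {url}")

    # Expected path: <owner>/<repo>/resolve/<revision>/<path/to/file>
    # ("blob" appears instead of "resolve" in browser-facing links).
    parts = parsed.path.strip("/").split("/")
    if len(parts) < 5 or parts[2] not in ("resolve", "blob"):
        raise ValueError(f"unrecognized Hugging Face file URL: {url}")

    repo_id = "/".join(parts[:2])
    revision = parts[3]  # assumes the revision name contains no "/"
    filename = "/".join(parts[4:])
    return repo_id, revision, filename


if __name__ == "__main__":
    # Hypothetical URL used purely for illustration.
    example = (
        "https://huggingface.co/example-org/example-model-GGUF"
        "/resolve/main/example-model-q4_k_m.gguf"
    )
    print(parse_hf_file_url(example))
```

Validating the URL shape before starting a download gives an early, readable error for mistyped links instead of a failed multi-gigabyte transfer.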

Future Model Support

We are actively working on expanding our model support. Planned additions include:

- 📚 More text-only models (targeting 5 additional options)

- 🖼️ More multimodal models (targeting 5 additional options)

- 🎙️ Offline speech-to-text models

Check our GitHub repository for the latest updates on supported models and features.